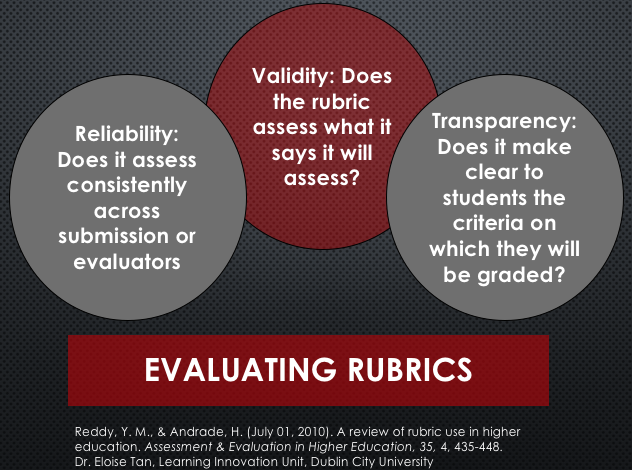

The types of reliability most often considered in classroom assessment and in rubric development involve rater reliability. Rater reliability refers to the consistency of scores assigned by two independent raters (inter‐rater reliability) or by the same rater at different points in time (intra‐rater reliability).

The literature most frequently recommends two approaches to inter‐rater reliability: consensus and consistency. Consensus (agreement) measures whether raters assign the same score, while consistency measures the correlation between raters' scores. Several studies have shown that rubrics can allow instructors and students to reliably assess performance.
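The difference between consensus and consistency can be illustrated with a minimal sketch. It assumes consensus is computed as simple percent agreement and consistency as a Pearson correlation; these are common choices, not necessarily the measures used in the studies summarized here. The function names and the scores are hypothetical.

```python
from math import sqrt


def percent_agreement(rater_a, rater_b):
    """Consensus: fraction of items on which both raters gave the same score."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("score lists must be non-empty and of equal length")
    matches = sum(1 for a, b in zip(rater_a, rater_b) if a == b)
    return matches / len(rater_a)


def pearson_correlation(rater_a, rater_b):
    """Consistency: linear correlation between the two raters' scores."""
    if len(rater_a) != len(rater_b) or len(rater_a) < 2:
        raise ValueError("score lists must have equal length and at least two items")
    n = len(rater_a)
    mean_a = sum(rater_a) / n
    mean_b = sum(rater_b) / n
    covariance = sum((a - mean_a) * (b - mean_b) for a, b in zip(rater_a, rater_b))
    spread_a = sum((a - mean_a) ** 2 for a in rater_a)
    spread_b = sum((b - mean_b) ** 2 for b in rater_b)
    if spread_a == 0 or spread_b == 0:
        raise ValueError("correlation is undefined when a rater gives every item the same score")
    return covariance / sqrt(spread_a * spread_b)


# Hypothetical scores on a 1-4 rubric scale: rater B is always one point higher.
rater_a = [1, 2, 3, 2, 1]
rater_b = [2, 3, 4, 3, 2]

print("consensus (percent agreement):", percent_agreement(rater_a, rater_b))
print("consistency (Pearson r):", round(pearson_correlation(rater_a, rater_b), 3))
```

In this made-up example the raters never assign the same score, so consensus is zero, yet they rank the work identically, so the correlation is essentially perfect. This is why the two measures are usually reported separately.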

Of the four papers found that discuss the validity of the rubrics used in research, three focused on the appropriateness of the language and content of a rubric for the population of students being assessed. The language used in a rubric is considered to be one of the most challenging aspects of rubric design.

As with any form of assessment, the clarity of the language in a rubric is a matter of validity because an ambiguous rubric cannot be accurately or consistently interpreted by instructors, students or scorers.

Teaching tips from reliability research: Scorer training is the most important factor for achieving reliable and valid large‐scale assessments. Before using a rubric, a teaching team should practice grading assignments together to ensure rubric clarity.